Fixing 8 errors encountered while deploying and installing Dinky 0.7.4

0x00 Ecosystem versions

| flink | dinky | hdfs |

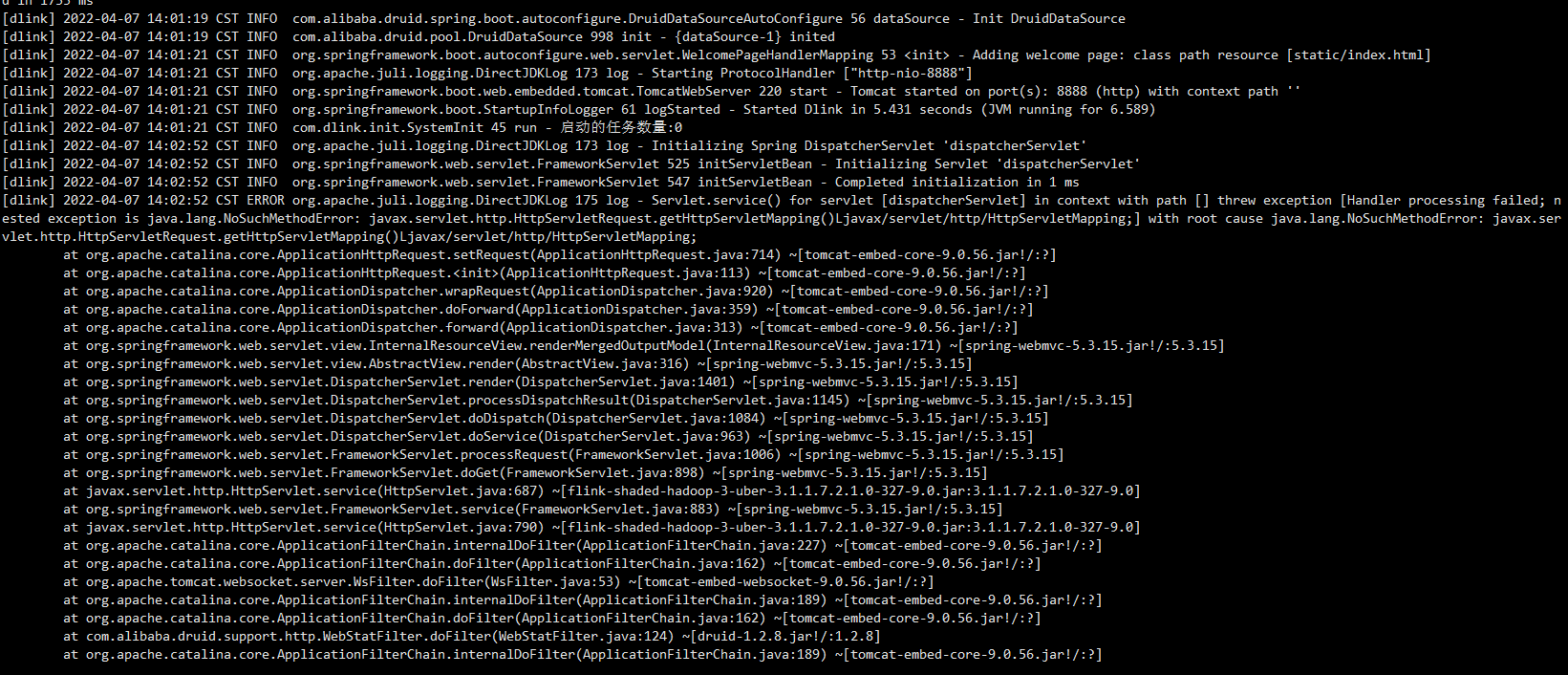

|---|---|---|

| 1.17.1 | 0.7.4 | 3.1.1 |

0x01 Issues

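1. With Flink on YARN in HA mode and the HDFS dependencies configured, the HDFS HA nameservice alias is not recognized, and submitting a job in Per-job or Application mode throws an exception

Solution: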

Option 1

Upgrade Dinky to 0.6.2 or later. See: https://github.com/DataLinkDC/dlink/issues/310

I am on 0.7.4 and still get the error, so this option did not fix it.

Option 2

Set the HADOOP_HOME environment variable by adding the following to /etc/profile:

```bash
export HADOOP_HOME=/opt/cloudera/parcels/CDH/lib/hadoop
```

In a USDP environment, make this change in /srv/.service_env instead. When editing that file, comment out the echo ${SERVICE_BIN} at the end; /srv/.set_profile must be modified as well. Contents of /srv/.service_env:

```bash
export UDP_SERVICE_PATH=/srv/udp/2.0.0.0

# HOME
export FLINK_HOME=${UDP_SERVICE_PATH}/flink
export FLUME_HOME=${UDP_SERVICE_PATH}/flume
export HIVE_HOME=${UDP_SERVICE_PATH}/hive
export IMPALA_HOME=${UDP_SERVICE_PATH}/impala
export KYLIN_HOME=${UDP_SERVICE_PATH}/kylin
export LIVY_HOME=${UDP_SERVICE_PATH}/livy
export PHOENIX_HOME=${UDP_SERVICE_PATH}/phoenix
export PRESTO_HOME=${UDP_SERVICE_PATH}/presto
export TRINO_HOME=${UDP_SERVICE_PATH}/trino
export SPARK_HOME=${UDP_SERVICE_PATH}/spark
export SQOOP_HOME=${UDP_SERVICE_PATH}/sqoop
export DATAX_HOME=${UDP_SERVICE_PATH}/datax
export TEZ_HOME=${UDP_SERVICE_PATH}/tez
export YARN_HOME=${UDP_SERVICE_PATH}/yarn
export HADOOP_HOME=${UDP_SERVICE_PATH}/yarn

# ... other settings omitted here

export SERVICE_BIN=${FLINK_BIN}:${FLUME_BIN}:${HIVE_BIN}:${IMPALA_BIN}:${KYLIN_BIN}:${LIVY_BIN}:${PHOENIX_BIN}:${PRESTO_BIN}:${SPARK_BIN}:${SQOOP_BIN}:${DATAX_BIN}:${TEZ_BIN}:${YARN_BIN}:${ELASTICSEARCH_BIN}:${HBASE_BIN}:${HDFS_BIN}:${KAFKA_BIN}:${KUDU_BIN}:${ZOOKEEPER_BIN}:${HUE_BIN}:${KAFKAEAGLE_BIN}:${KIBANA_BIN}:${ZEPPELIN_BIN}:${ZKUI_BIN}:${AIRFLOW_BIN}:${OOZIE_BIN}:${UDS_BIN}:${DOLPHINSCHEDULER_BIN}:${RANGER_BIN}:${ATLAS_BIN}:${TRINO_BIN}:${NEO4J_BIN}

# Commented out so that sourcing this file prints nothing
#echo ${SERVICE_BIN}
```

Contents of /srv/.set_profile:

```bash
LINE0='source /srv/.service_env'
#LINE1='SERVICE_BIN=`/bin/bash /srv/.service_env`'
LINE2='export PATH=${SERVICE_BIN}:$PATH'

# /etc/profile
echo "${LINE0}" >> /etc/profile
#echo "${LINE1}" >> /etc/profile
echo "${LINE2}" >> /etc/profile

# /root/.bashrc
echo "${LINE0}" >> /root/.bashrc
#echo "${LINE1}" >> /root/.bashrc
echo "${LINE2}" >> /root/.bashrc

# /root/.bash_profile
echo "${LINE0}" >> /root/.bash_profile
#echo "${LINE1}" >> /root/.bash_profile
echo "${LINE2}" >> /root/.bash_profile

# /home/hadoop/.bashrc
echo "${LINE0}" >> /home/hadoop/.bashrc
#echo "${LINE1}" >> /home/hadoop/.bashrc
echo "${LINE2}" >> /home/hadoop/.bashrc

# /home/hadoop/.bash_profile
echo "${LINE0}" >> /home/hadoop/.bash_profile
#echo "${LINE1}" >> /home/hadoop/.bash_profile
echo "${LINE2}" >> /home/hadoop/.bash_profile
```
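The /srv/.set_profile script above appends with >>, so running it a second time duplicates every line in each profile. A minimal re-runnable sketch of the same appends, assuming you only need the two uncommented lines (LINE0 and LINE2); append_once and install_profile_lines are hypothetical names, not part of USDP:

```bash
#!/usr/bin/env bash
# Hypothetical helper: append LINE to FILE unless FILE already contains
# that exact line, so the profile setup can be re-run safely.
append_once() {
  local line=$1 file=$2
  # -q quiet, -x whole-line match, -F fixed string (no regex)
  if ! grep -qxF -- "$line" "$file" 2>/dev/null; then
    printf '%s\n' "$line" >> "$file"
  fi
}

# Hypothetical wrapper: add the two lines used by /srv/.set_profile
# to every file given as an argument.
install_profile_lines() {
  local f
  for f in "$@"; do
    append_once 'source /srv/.service_env' "$f"
    append_once 'export PATH=${SERVICE_BIN}:$PATH' "$f"
  done
}
```

Usage (as root): install_profile_lines /etc/profile /root/.bashrc /root/.bash_profile /home/hadoop/.bashrc /home/hadoop/.bash_profile. The single quotes keep ${SERVICE_BIN} and $PATH literal, so they are expanded when the profile is sourced, not when it is written.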

Option 3

Add the following line to auto.sh:

```bash
export HADOOP_HOME=/opt/cloudera/parcels/CDH/lib/hadoop
```
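Whichever option you use, it helps to confirm that the configuration reachable from HADOOP_HOME actually defines the HA nameservice. A sketch, assuming Flink looks for hdfs-site.xml under $HADOOP_HOME/conf and $HADOOP_HOME/etc/hadoop, and assuming the file writes the property as <name>dfs.nameservices</name> with no extra whitespace; check_hdfs_ha is a hypothetical name:

```bash
#!/usr/bin/env bash
# Hypothetical check: given a Hadoop home (default: $HADOOP_HOME), find an
# hdfs-site.xml in the directories assumed to be searched and confirm it
# defines dfs.nameservices. Prints the matching file on success.
check_hdfs_ha() {
  local home=${1:-$HADOOP_HOME}
  local dir
  if [ -z "$home" ]; then
    echo "HADOOP_HOME is not set" >&2
    return 1
  fi
  for dir in "$home/conf" "$home/etc/hadoop"; do
    if [ -f "$dir/hdfs-site.xml" ] &&
       grep -qF '<name>dfs.nameservices</name>' "$dir/hdfs-site.xml"; then
      echo "$dir/hdfs-site.xml"
      return 0
    fi
  done
  echo "no hdfs-site.xml defining dfs.nameservices under $home" >&2
  return 1
}
```

Run it in the same shell environment that starts Dinky, e.g. check_hdfs_ha with no arguments after sourcing /etc/profile; a failure means the HA alias cannot be resolved from that HADOOP_HOME.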

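2. A commons-cli exception is thrown; the commons-cli dependency has to be placed in the locations below

Solution:

Download the commons-cli jar and place it in each of the following locations: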

- Flink's lib directory

- Dinky's plugins directory

- /flink/lib/ on HDFS
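Copying the same jar to all three places by hand is easy to get wrong. A sketch of the three copies, assuming FLINK_HOME and the Dinky install directory are passed in and the standard hdfs dfs -put -f command is available; distribute_jar is a hypothetical name, and HDFS_CMD is a hypothetical override used only for dry runs:

```bash
#!/usr/bin/env bash
# Hypothetical helper: copy one jar into Flink's lib, Dinky's plugins and
# an HDFS directory (default /flink/lib/).
# HDFS_CMD defaults to the real `hdfs` CLI; set it to `echo` for a dry run.
distribute_jar() {
  local jar=$1 flink_home=$2 dinky_home=$3 hdfs_dir=${4:-/flink/lib/}
  local hdfs_cmd=${HDFS_CMD:-hdfs}
  [ -f "$jar" ] || { echo "jar not found: $jar" >&2; return 1; }
  cp "$jar" "$flink_home/lib/" || return 1
  cp "$jar" "$dinky_home/plugins/" || return 1
  # -f overwrites a file of the same name already on HDFS
  "$hdfs_cmd" dfs -put -f "$jar" "$hdfs_dir"
}
```

For example: distribute_jar /path/to/commons-cli-<version>.jar "$FLINK_HOME" /path/to/dinky, where both paths and the version are placeholders for your own installation.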

3. Dependency conflicts

Solution:

If you add the flink-shaded-hadoop-3-uber jar, manually delete javax.servlet and the other conflicting contents inside it.

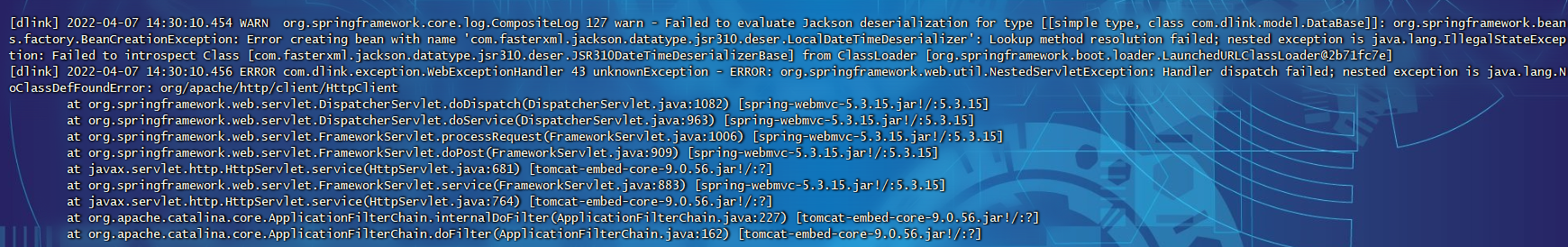

4. Error connecting to Hive

```
Caused by: java.lang.ClassNotFoundException: org.apache.http.client.HttpClient
```

Solution:

Add the following jar to Dinky's plugins directory:

```
httpclient-4.5.3.jar
```

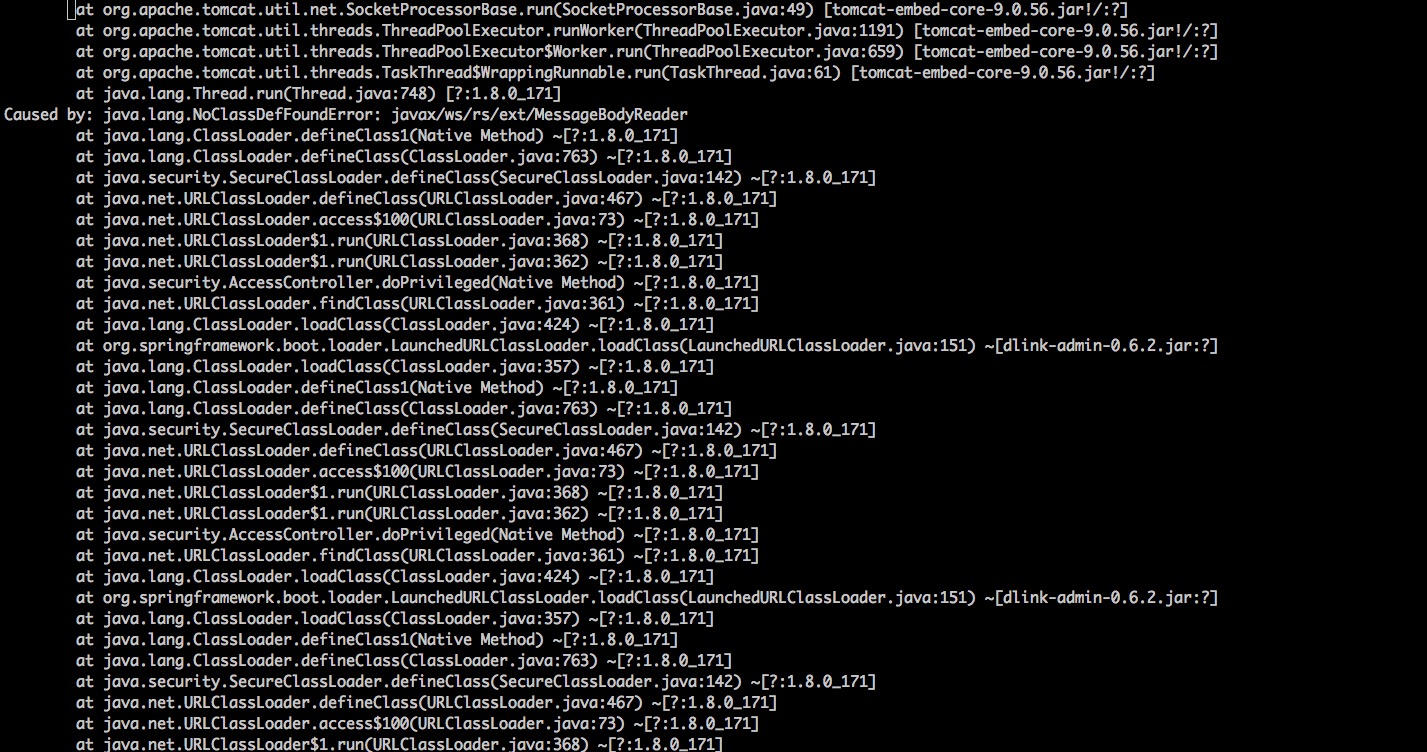

5. Class not found: javax/ws/rs/ext/MessageBodyReader

Solution:

Add the following jar to Dinky's plugins directory:

```
javax.ws.rs-api-2.0.jar
```

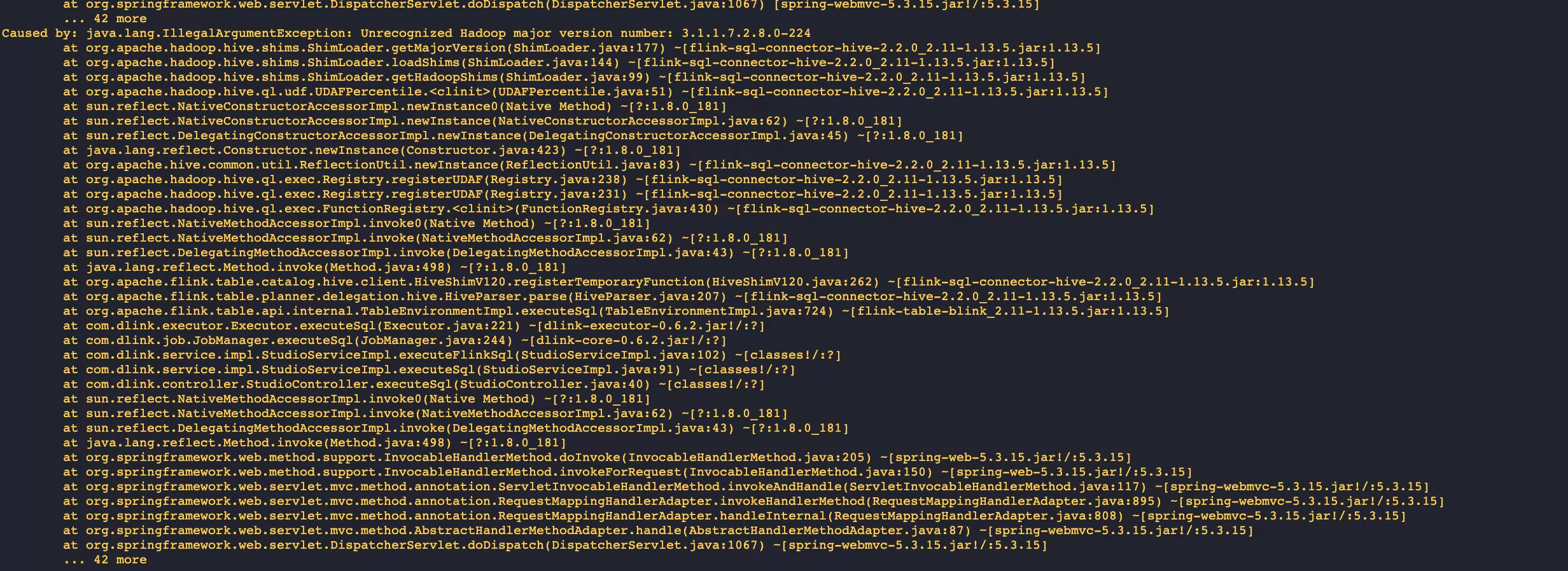
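Jars such as httpclient-4.5.3.jar and javax.ws.rs-api-2.0.jar can be downloaded directly from Maven Central. A sketch that builds the download URL from Maven coordinates, assuming Maven Central's standard layout (group path / artifact / version / artifact-version.jar); maven_central_url is a hypothetical name:

```bash
#!/usr/bin/env bash
# Hypothetical helper: build the Maven Central URL of a jar from its
# groupId, artifactId and version.
maven_central_url() {
  local group=$1 artifact=$2 version=$3
  local group_path
  # groupId dots become path separators: javax.ws.rs -> javax/ws/rs
  group_path=$(printf '%s' "$group" | tr '.' '/')
  printf 'https://repo1.maven.org/maven2/%s/%s/%s/%s-%s.jar\n' \
    "$group_path" "$artifact" "$version" "$artifact" "$version"
}
```

For example, curl -fLO "$(maven_central_url javax.ws.rs javax.ws.rs-api 2.0)" downloads javax.ws.rs-api-2.0.jar, and maven_central_url org.apache.httpcomponents httpclient 4.5.3 gives the URL of httpclient-4.5.3.jar.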

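6. When Flink is integrated with CDH and uses HiveCatalog, you must recompile the Flink source for your Flink version; without recompiling, it fails at runtime with the following error:

Solution:

1. Download the Flink source for your version and change into the flink-sql-connector directory for your Hive version.

2. Edit the pom and add the following:

```
#Add the Cloudera repository
```

After a successful build, take the resulting jar and put it in both flink/lib and dinky/plugins, then restart Flink and Dinky.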

7. java.lang.NoClassDefFoundError: com/sun/jersey/core/util/FeaturesAndProperties

Error:

```
ERROR org.apache.flink.yarn.cli.FlinkYarnSessionCli [] - Error while running the Flink session.
```

The jersey jars jersey-server-1.9.jar and jersey-core-1.9.jar are missing; download them and put them in Flink's lib/ directory.

Alternatively, adding flink-shaded-guava-31.1-jre-17.0.jar also resolves it.

8. Memory configuration problem

```
org.apache.flink.configuration.IllegalConfigurationException: TaskManager memory configuration failed: Derived JVM Overhead size (1.750gb (1879048192 bytes)) is not in configured JVM Overhead range [192.000mb (201326592 bytes), 1024.000mb (1073741824 bytes)].
```

Solution: check the memory settings in your flink-conf.yaml:

```
jobmanager.memory.process.size
```

Compare these settings with the -jm and -tm values you pass when starting yarn-session. The total memory size in the configuration file must be greater than the -tm value plus 256 MB.

Note:

| Setting | TaskManager parameter | JobManager parameter |
|---|---|---|
| Total Flink memory | taskmanager.memory.flink.size | jobmanager.memory.flink.size |
| Total process memory | taskmanager.memory.process.size | jobmanager.memory.process.size |

These two memory settings can conflict, so it is best not to set both in the configuration file.
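The conflict follows from how Flink splits memory: total process memory = total Flink memory + JVM metaspace + JVM overhead. When both sizes in the table are set explicitly, Flink derives the JVM overhead as the remainder and fails if it falls outside the overhead range shown in the error. A minimal sketch of that arithmetic, assuming the default 256 MB metaspace and the 192 MB to 1024 MB range from the error message; check_jvm_overhead is a hypothetical name and the values below are illustrative, not taken from the failing setup:

```bash
#!/usr/bin/env bash
# Hypothetical helper: reproduce the JVM Overhead derivation used when both
# total process memory and total Flink memory are set explicitly:
#   overhead = process - flink - metaspace
# Assumed defaults: metaspace 256 MB, overhead range [192, 1024] MB.
# All sizes are whole megabytes.
check_jvm_overhead() {
  local process_mb=$1 flink_mb=$2
  local metaspace_mb=${3:-256} min_mb=${4:-192} max_mb=${5:-1024}
  local overhead_mb=$(( process_mb - flink_mb - metaspace_mb ))
  echo "derived JVM overhead: ${overhead_mb} MB"
  if (( overhead_mb < min_mb || overhead_mb > max_mb )); then
    echo "not in range [${min_mb} MB, ${max_mb} MB]" >&2
    return 1
  fi
}
```

With process 4096 MB and Flink 2048 MB, check_jvm_overhead 4096 2048 prints a derived overhead of 1792 MB (1.75 GB, the same figure as in the error above) and fails; with Flink 3328 MB the overhead is 512 MB and the check passes. Setting only one of the two sizes lets Flink compute the overhead as a fraction of the total instead.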